JUST TYPED ALONG WITH THE SESSION, SO EVERYTHING IS STILL PRETTY MESSY. NO LINKS INCLUDED, AND POSSIBLE FAULTS ARE MY OWN RATHER THAN THE SPEAKERS'…

Controlling a Physical Model with a 2D Force Matrix

Randy Jones, Andrew Schloss

MISTIC center. The first goal was intimate control of percussion synthesis: a musical instrument should be alive in the hands of the musician, and what comes between the notes matters as much as the notes themselves. The other goal is sonic exploration.

The 2D waveguide mesh was first described by Van Duyne & Smith. WaveguideMesh is implemented as a 3×3 convolution in a Max/MSP/Jitter object.
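For a rectilinear mesh, each junction's next value equals half the sum of its four neighbours minus its own previous value, which is why a 3×3 convolution is enough. The sketch below assumes that rectilinear form, a small grid and zero values beyond the edges; the function names are illustrative, not the authors' Jitter object.

```python
# Hypothetical sketch: rectilinear 2D waveguide mesh expressed as a 3x3 convolution.
# The kernel is symmetric, so convolution and correlation give the same result.
KERNEL = [[0.0, 0.5, 0.0],
          [0.5, 0.0, 0.5],
          [0.0, 0.5, 0.0]]


def convolve3x3(grid, kernel):
    """Same-size 3x3 convolution; cells outside the grid count as zero (fixed edges)."""
    rows, cols = len(grid), len(grid[0])
    out = [[0.0] * cols for _ in range(rows)]
    for i in range(rows):
        for j in range(cols):
            acc = 0.0
            for di in (-1, 0, 1):
                for dj in (-1, 0, 1):
                    ii, jj = i + di, j + dj
                    if 0 <= ii < rows and 0 <= jj < cols:
                        acc += kernel[di + 1][dj + 1] * grid[ii][jj]
            out[i][j] = acc
    return out


def mesh_step(current, previous):
    """One time step of the mesh: u[n+1] = (KERNEL * u[n]) - u[n-1]."""
    spread = convolve3x3(current, KERNEL)
    nxt = [[s - p for s, p in zip(srow, prow)] for srow, prow in zip(spread, previous)]
    return nxt, current


def simulate(size=9, steps=50, pickup=(2, 3)):
    """Strike the centre with an impulse and record the signal at a pickup cell."""
    current = [[0.0] * size for _ in range(size)]
    previous = [[0.0] * size for _ in range(size)]
    current[size // 2][size // 2] = 1.0
    out = []
    for _ in range(steps):
        current, previous = mesh_step(current, previous)
        out.append(current[pickup[0]][pickup[1]])
    return out
```

Keeping the previous frame around is the only state the update needs besides the current one, which suits a video-style matrix pipeline where each frame is one time step.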

The 2D Force Matrix takes care of the input to the waveguide mesh, acting as excitation and damping at the same time. Two sources of data go into the matrix: surface data and multitouch data from multitouch controllers. Surface pressure is sampled continuously, both spatially and temporally.
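The notes only say that the force matrix is excitation and damping at once. One way to picture that is sketched below, assuming pressure frames normalised to 0..1 and the same size as the mesh. The function name, the gains and the rule (a rise in pressure excites, sustained pressure damps) are hypothetical, not the authors' method.

```python
def clamp01(x):
    return min(max(x, 0.0), 1.0)


def apply_force_matrix(mesh, pressure, prev_pressure, excite_gain=1.0, damp_gain=0.5):
    """Hypothetical coupling of one pressure frame onto a mesh of the same size.

    - excitation: any rise in pressure since the previous frame is injected
    - damping: pressed cells are attenuated in proportion to their pressure
    Pressures are assumed normalised to 0..1; gains are arbitrary defaults.
    """
    out = []
    for m_row, p_row, q_row in zip(mesh, pressure, prev_pressure):
        row = []
        for m, p, q in zip(m_row, p_row, q_row):
            p, q = clamp01(p), clamp01(q)
            rise = max(p - q, 0.0)            # new or harder contact excites the surface
            damping = 1.0 - damp_gain * p     # resting fingers mute the surface locally
            row.append(m * damping + excite_gain * rise)
        out.append(row)
    return out
```

With this rule a quick tap mostly excites, while a finger left resting on the surface mostly mutes the region under it.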

Concerts: Schloss, Duran, Mitri Trio: EMF, Real Art Ways & Schloss, Neto, Mitri Trio: CCRMA

Current Goals: Realistic filtering (nonlinear hammer), more aspects of drum modeling, increase controller sampling rate.

PHYSMISM: A control interface for creative exploration of physical models

Niels Boettcher, Steven Gelineck, Stefania Serafin

Medialogy, Aalborg University Copenhagen, Denmark

Motivation: physical models are old-school and they sound like shit... it was a challenge.

What are the possibilities and boundaries? Focus on completely new sounds.

Design criteria: many different models; replica models that sound like the original; extended replica models; hybrid models (models + instruments); a physical interface; an unusual interface that the audience can understand; the interface should be musical and offer a lot of possibilities.

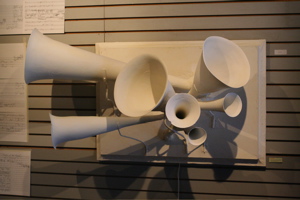

4 different models implemented: flute model, PhISM model, friction model, drum model

Crank: a crank controlling a PhISM particle model; the rotation speed sets the amount of beans.
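PhISM (Physically Informed Stochastic Event Modeling) treats a shaker as random particle collisions that excite a resonator. The sketch below is a simplified shaker in that style, assuming a per-sample crank speed normalised to 0..1. Mapping speed to the number of beans follows the note; the energy top-up while the crank turns, all constants and the function name are assumptions.

```python
import math
import random


def phism_crank(crank_speeds, sample_rate=44100, max_beans=64,
                system_decay=0.999, sound_decay=0.95,
                res_freq=3200.0, res_radius=0.96, seed=0):
    """Hypothetical PhISM-style shaker driven by a crank.

    crank_speeds: one rotation-speed value per sample, normalised to 0..1 (assumption).
    Following the session note, the speed sets the number of beans; the crank also
    tops up the shaking energy while it turns (our assumption, not from the talk).
    """
    rng = random.Random(seed)
    theta = 2.0 * math.pi * res_freq / sample_rate
    a1, a2 = 2.0 * res_radius * math.cos(theta), -res_radius ** 2
    energy = sound_level = y1 = y2 = 0.0
    out = []
    for speed in crank_speeds:
        speed = min(max(speed, 0.0), 1.0)
        beans = round(speed * max_beans)
        energy = energy * system_decay + 0.001 * speed
        # stochastic collisions: more beans, more collisions per second
        if rng.random() < beans / 1024.0:
            sound_level += energy
        x = sound_level * rng.uniform(-1.0, 1.0)   # noise burst scaled by collision energy
        sound_level *= sound_decay
        y = x + a1 * y1 + a2 * y2                  # two-pole resonator for the body
        y2, y1 = y1, y
        out.append(y)
    return out
```

The output is not normalised. A stopped crank produces silence, and a faster crank gives denser collisions.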
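The notes do not say what the extensions are. For reference, the basic Karplus-Strong algorithm that they build on is a noise-filled delay line fed back through a two-point average. This minimal sketch implements only that textbook form. The decay constant and function name are illustrative assumptions.

```python
import random


def karplus_strong(frequency, duration, sample_rate=44100, decay=0.996, seed=0):
    """Basic Karplus-Strong pluck: y[n] = decay * (y[n-P] + y[n-P-1]) / 2.

    This is the textbook algorithm, not the extended model used in PHYSMISM.
    """
    rng = random.Random(seed)
    # the two-point average adds half a sample of delay, so the loop period is P + 0.5
    period = max(1, round(sample_rate / frequency - 0.5))
    total = int(duration * sample_rate)
    out = []
    for n in range(total):
        if n < period:
            out.append(rng.uniform(-1.0, 1.0))          # initial noise burst (the "pluck")
        else:
            older = out[n - period - 1] if n - period - 1 >= 0 else 0.0
            out.append(decay * 0.5 * (out[n - period] + older))
    return out
```

The pitch comes out near `sample_rate / (P + 0.5)`. The averaging filter damps high partials faster than low ones, which gives the characteristic plucked decay.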

mini sequencer that is not innovative at all

Physical models and musical controllers – designing a novel electronic percussion instrument

Katarzyna Chuchacz, Sile O’Modhrain, Roger Woods

Sonic Arts Research Centre, Queen’s University in Belfast

Existing Electronic Percussion Instruments: Buchla Thunder, Korg Wavedrum, ETabla

Limitations: complexity, extent of control... modeling large instruments and nonlinear sounds is especially difficult

Creation of real-time plate-based electronic percussion instruments

high quality sound, range of modeled resonators

Finite difference schemes: the problems are the huge computational requirements and memory access, which limit real-time performance. On the other hand, they make it possible to recreate large instruments.

Solution: FPGA hardware implementation: you can program the architecture of your system, which gives full flexibility.

Why? More processing power, parallelism in the algorithm, higher memory-access bandwidth, flexibility in interfacing to a range of sensors.
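To show why the load is so heavy, here is a minimal explicit finite-difference step for a lossless thin (Kirchhoff) plate, u_tt = -κ²∇⁴u. Every grid point needs a 13-point stencil on every sample. The zero-outside boundary treatment, the grid and `mu` are assumptions for illustration. This is not the scheme from the talk.

```python
def laplacian(u):
    """Unscaled 5-point Laplacian; points outside the grid are taken as zero."""
    rows, cols = len(u), len(u[0])

    def at(i, j):
        return u[i][j] if 0 <= i < rows and 0 <= j < cols else 0.0

    return [[at(i + 1, j) + at(i - 1, j) + at(i, j + 1) + at(i, j - 1) - 4.0 * u[i][j]
             for j in range(cols)] for i in range(rows)]


def plate_step(u, u_prev, mu=0.2):
    """One explicit step of a lossless Kirchhoff plate:
        u[n+1] = 2 u[n] - u[n-1] - mu^2 * L(L(u[n])),  mu = kappa * k / h^2.
    Applying L twice gives the 13-point biharmonic stencil; stable for mu <= 1/4."""
    bih = laplacian(laplacian(u))
    u_next = [[2.0 * a - b - mu * mu * c for a, b, c in zip(ra, rb, rc)]
              for ra, rb, rc in zip(u, u_prev, bih)]
    return u_next, u
```

Each point is updated only from the previous two time levels. This independence is the parallelism in the algorithm that an FPGA can exploit.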

Now it runs “faster than realtime”

Parameter space: grid size, plate size, sample frequency

Parameter mapping: hardware mapping parameters, sound synthesis parameters
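The three parameters are linked. For an explicit stiff-plate scheme with μ = κk/h² ≤ 1/4 (k = 1/fs), the finest stable grid spacing is h = 2√(κk). This rough estimator assumes that bound, a 13-point stencil and one multiply-add per stencil point. The value κ = 1.5 m²/s is an assumed figure of roughly the right size for a 1 mm steel plate.

```python
import math


def plate_cost(width_m, height_m, kappa, sample_rate, stencil_points=13):
    """Rough cost of an explicit stiff-plate scheme at the stability limit.

    Assumptions: mu = kappa * k / h^2 <= 1/4 (so h >= 2 * sqrt(kappa * k)),
    a 13-point biharmonic stencil and one multiply-add per stencil point.
    """
    k = 1.0 / sample_rate                      # time step
    h_min = 2.0 * math.sqrt(kappa * k)         # finest stable grid spacing
    nx, ny = int(width_m / h_min), int(height_m / h_min)
    points = nx * ny
    macs_per_second = points * stencil_points * sample_rate
    return h_min, points, macs_per_second


# kappa = 1.5 m^2/s is an assumed value, roughly a 1 mm steel plate
for fs in (44_100, 88_200):
    h, pts, macs = plate_cost(1.0, 1.0, kappa=1.5, sample_rate=fs)
    print(f"fs={fs}: h={h * 1000:.1f} mm, {pts} points, {macs / 1e9:.1f} G multiply-adds/s")
```

Because the point count grows with fs and the number of steps also grows with fs, doubling the sample rate roughly quadruples the work for the same plate size. For a 1 m × 1 m plate this goes from about 4 to about 17 billion multiply-adds per second.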

Sound world of the model

Opportunity to drive the model in a number of ways: many parameters are fully open... the possibility to go beyond the constraints that exist in acoustic systems

The design approach is based on connecting to the sample of the model and creating a successful interface

What is the range of techniques of a real percussion player?

Future work concentrates on observing real percussionists; the sensor system specifications should follow from this

A Force Sensitive Multi-touch Array Supporting Multiple 2-D Control Structures

David Wessel, Rimas Avizienis, Matthew Wright

Gestures and signals: working with percussion sensors requires a very high rate of motion capture. Interlink VersaPad semi-conductive touchpad.

Multi-touch is all the rage; we should get the data rate up in order to be able to process high-definition multi-touch controllers.

The most compact layout that gets all fingers on the pad. Not really multi-touch, but several VersaPads next to each other. The new one has 32 pads.

Data acquisition hardware: daughter-boards with 4 to 6 sensors each, analog conditioning, multichannel A/D

72 or 96 variables: the most efficient way is to use just 72 audio channels and only convert them when necessary.

Only a MIDI input, no output: it is just there to turn the MIDI into sample signals alongside the audio.

Yes: reading 147,456,000 bits per second is cheaper than demultiplexing, upsampling and converting to floating point on the host CPU.
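The quoted figure is simply channels × sample rate × bits per sample. The talk did not give the breakdown. The layouts below are just two that reproduce the number exactly and are assumptions.

```python
def stream_bits_per_second(channels, sample_rate, bits_per_sample):
    """Raw bit rate of an uncompressed multichannel sample stream."""
    return channels * sample_rate * bits_per_sample


# Two channel layouts that reproduce the quoted figure (assumptions, not from the talk):
print(stream_bits_per_second(96, 48_000, 32))   # 147456000
print(stream_bits_per_second(48, 96_000, 32))   # 147456000
```

In bytes that is 18,432,000 per second, a rate that an ordinary audio interface driver already handles routinely.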

Pressure profiles of short taps that percussionists use: 9 ms, 14 ms, 18 ms, etc.

The pressure profiles of short taps show substantially different attacks and curves, even within the first milliseconds.
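A quick count shows why those first milliseconds demand a high data rate: the number of readings that fall inside a tap is its duration times the sensor rate. The three rates below are illustrative choices, not figures from the talk.

```python
def samples_per_tap(duration_ms, rate_hz):
    """Whole sample periods inside a tap: roughly how many readings it gets."""
    return duration_ms * rate_hz // 1000


for rate in (100, 1_000, 48_000):   # illustrative sensor rates, not figures from the talk
    print(rate, [samples_per_tap(d, rate) for d in (9, 14, 18)])
# 100 [0, 1, 1]
# 1000 [9, 14, 18]
# 48000 [432, 672, 864]
```

At a typical control rate a 9 ms tap may not be sampled at all, while at an audio rate its attack shape is resolved in hundreds of points.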

Zstretch: A Stretchy Fabric Music Controller

Angela Chang, Hiroshi Ishii, Joe Paradiso

Starting point: our hands possess rich capabilities for interacting with materials

Related work: most of it lacks haptic feedback, which alters the control loop.

Musical fabrics: mostly about localized places for touching the fabric rather than supporting the many gestures of our hands.

The controller should support 0 to 20 newtons, which means it has to be robust / haptic expression / the fabric should guide the interaction

Resistive stretch sensors are sewn into the Lycra fabric

Mechanical: a tabletop frame that holds the fabric but allows access to all of its sides

Robustness issues: noise from mechanical contacts, drift of the threads' resistance over time, bouncebacks after a hard pull, fabric fatigue from wear and tear.

Software: mapping to playback speed (pitch) and volume... an interrupting "zing" noise with its own volume control (this was later considered annoying)

Conclusion: scalable, no electronics in the interaction, supports interaction with the hands; it's about material properties. Next: better mappings and better materials.
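A minimal sketch of the stretch-to-playback mapping follows. It assumes each sensor is calibrated with a resting and a full-stretch reading. It also assumes an exponential one-octave range either side of normal speed and volume following stretch linearly. The names and ranges are hypothetical, not the Zstretch software.

```python
def normalise(raw, rest, full):
    """Map a raw stretch-sensor reading to 0..1 between calibrated rest and full stretch."""
    if full == rest:
        return 0.0
    return min(max((raw - rest) / (full - rest), 0.0), 1.0)


def stretch_to_playback(raw, rest, full, octaves=1.0):
    """Hypothetical mapping: stretch sets playback rate (pitch) and gain (volume)."""
    s = normalise(raw, rest, full)
    rate = 2.0 ** (octaves * (2.0 * s - 1.0))   # 0.5x .. 2x for the default one octave
    gain = s                                     # silent at rest, loudest at full stretch
    return rate, gain


print(stretch_to_playback(200, rest=100, full=300))   # (1.0, 0.5)
```

Dividing by `full - rest` also works for sensors whose resistance falls as they stretch. Recalibrating `rest` from time to time is one possible response to the resistance drift mentioned above.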

Oculog: Playing with Eye Movements

Juno Kim, Greg Schiemer, Terumi Narushima

From the Sonic Arts Research Network, Faculty of Creative Arts, University of Wollongong, Australia

Initially for clinical use, adapted as an expressive performance interface. First performance will be held in July 2007

Interface: a FireWire camera mounted on snow goggles to capture the eye movements. Up to 120 fps; 30 fps in performance.

Control can be either voluntary or involuntary. Eye movement is mapped to MIDI, implemented using STK.
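As an illustration of the MIDI side only, the sketch below turns a normalised horizontal and vertical eye position into two standard MIDI Control Change messages: status byte 0xB0 plus the channel, a controller number and a 7-bit value. It does not use STK. The controller numbers (16 and 17, General Purpose 1 and 2) and the 0..1 position range are arbitrary assumptions.

```python
def to_cc_value(x):
    """Clamp a normalised 0..1 position and scale it to a 7-bit MIDI value."""
    x = min(max(x, 0.0), 1.0)
    return int(round(x * 127))


def eye_to_midi(h, v, channel=0, cc_h=16, cc_v=17):
    """Hypothetical mapping of normalised eye position to two MIDI Control Change messages.
    Controller numbers 16/17 (General Purpose 1/2) are an arbitrary choice."""
    status = 0xB0 | (channel & 0x0F)
    return [bytes([status, cc_h, to_cc_value(h)]),
            bytes([status, cc_v, to_cc_value(v)])]


print(eye_to_midi(0.0, 1.0))   # [b'\xb0\x10\x00', b'\xb0\x11\x7f']
```

At the 30 fps used in performance this produces 60 messages per second, well within MIDI's bandwidth.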

5 channels of information: horizontal position, vertical position, etc… CHECK

Active listening to a virtual orchestra through an expressive gestural interface: The Orchestra…

Antonio Camurri, Corrado Canepa, Gualtiero Volpe

University of Genova, InfoMus Lab

Embodied active listening: enabling users to operate interactively on musical content by modifying it in real time

full body movement and gesture

focus on: high-level expressive qualities of movement and gesture, cross- and multimodal techniques; the result is embodied control of….

actively explore the orchestral play.

Multitrack audio inputs. You can operate on each single channel with real-time mixing.

Input from a video camera and other possible sensors... then tracking and extraction of specific features, modes of interaction with space, and possible visual feedback

Interaction with space: 2D potential functions are superimposed onto physical space, with each function applied to an individual instrument. You can change the parameters of the functions in real time in the space.

Public installations: they aim for an interface that is as natural as possible (ambient design, disappearance of technology for non-expert users)
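A minimal sketch of the idea: each instrument track gets its own 2D potential over the floor, and the tracked position of the listener sets every track's gain. The Gaussian shape, the class and function names and the stage coordinates are assumptions. The talk only says "2D potential functions".

```python
import math
from dataclasses import dataclass


@dataclass
class Potential:
    """Hypothetical 2D potential attached to one instrument track (Gaussian shape assumed)."""
    cx: float          # centre on the floor, in metres
    cy: float
    width: float       # spread in metres; can be changed live
    peak: float = 1.0

    def value(self, x, y):
        d2 = (x - self.cx) ** 2 + (y - self.cy) ** 2
        return self.peak * math.exp(-d2 / (2.0 * self.width ** 2))


def mix_gains(potentials, x, y):
    """Gain for each instrument track given the tracked position (x, y)."""
    return {name: p.value(x, y) for name, p in potentials.items()}


stage = {"violins": Potential(1.0, 2.0, 0.8), "cellos": Potential(3.0, 2.0, 0.8)}
gains = mix_gains(stage, 1.0, 2.0)   # standing on the violins: they play at full gain
stage["cellos"].width = 2.0          # widening a function live lets the cellos bleed in
```

Each gain would then scale one channel of the multitrack mix. Changing a function's centre or width while the piece plays matches the "change the parameters in real time" point above.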